Understanding the 499 Error in Nginx

Nginx is a popular web server. It's known for its performance and stability. Yet, like all software, it can run into issues. One such issue is the 499 error.

What is a 499 Error?

A 499 error means the client closed the connection before the server could send a response. It is a non-standard status code specific to Nginx: it is never sent to the client, only recorded in the access log.

Why Does It Happen?

Several reasons can cause a 499 error. Here are the common ones:

- Network Issues: Unstable connections can drop requests.

- Client Timeout: The client might lose patience. If the server takes too long, the client gives up.

- Client Crashes: The client software might crash or close suddenly.

- User Action: Sometimes, users cancel the request. This can be by closing the browser or stopping the app.

How to Identify a 499 Error

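Check your Nginx access log for entries with a status code of 499. In the default log format, each entry includes the client address, the timestamp, and the request line. Look for patterns: which URLs and clients are affected, and how often.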
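To look for patterns, it can help to count 499 entries per URL. The sketch below assumes the default "combined" access log format; the sample lines and the function name `count_499_by_path` are made up for illustration, so adjust the regular expression if your `log_format` differs.

```python
import re
from collections import Counter

# Matches the request line and status of the default "combined" format,
# e.g. ... "GET /path HTTP/1.1" 499 0 ...
LINE_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def count_499_by_path(lines):
    """Return a Counter mapping request path -> number of 499 entries."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if match and match.group("status") == "499":
            counts[match.group("path")] += 1
    return counts


# Hypothetical sample entries, not taken from a real server.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /api/report HTTP/1.1" 499 0 "-" "curl/8.0"',
    '203.0.113.9 - - [10/Oct/2024:13:55:40 +0000] "GET /index.html HTTP/1.1" 200 512 "-" "curl/8.0"',
    '203.0.113.5 - - [10/Oct/2024:13:56:02 +0000] "GET /api/report HTTP/1.1" 499 0 "-" "curl/8.0"',
]

# Print the most affected paths first.
for path, hits in count_499_by_path(SAMPLE_LOG).most_common():
    print(path, hits)
```

On a real server you would pass an open file instead of `SAMPLE_LOG`. Many Linux packages write the log to `/var/log/nginx/access.log`, but that path is an assumption and depends on your configuration.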

Impact on Your Site

Frequent 499 errors point to a poor user experience. Users may see incomplete pages or abandon requests that take too long, which leads to frustration. It's crucial to understand and fix the root cause.

Troubleshooting Steps

Here are steps to troubleshoot and reduce 499 errors:

1. Check Network Stability

Ensure that your server has a stable network connection. Unstable networks drop requests, which can lead to 499 errors in Nginx.

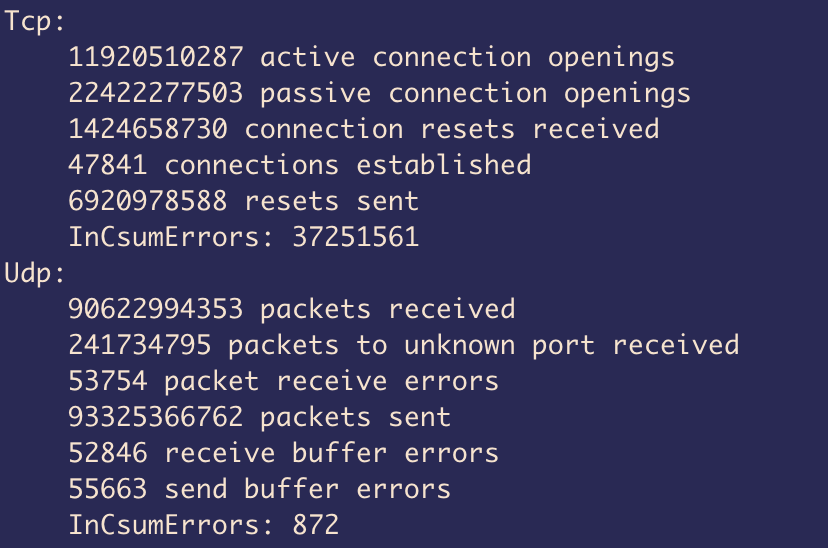

Check TCP statistics on the server:

netstat -s | grep -E 'failed connection attempts|segments received|segments sent out|segments retransmitted|bad segments received'

158476396 failed connection attempts

4955724369157 segments received

20667106951230 segments sent out

352486465012 segments retransmitted

39007293 bad segments received

Calculate the TCP retransmission rate using this formula:

segments retransmitted * 100 / (segments received + segments sent out)

As a general rule, a retransmission rate higher than 5 percent points to a network issue. In that case, locate the bottleneck in your network using tools like ping, traceroute, or iperf.
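As a worked example, the sketch below applies the formula above to the sample `netstat -s` output. The helper names `parse_tcp_counters` and `retransmission_rate` are hypothetical, and the parsing assumes the counter wording shown in this article, which can differ between net-tools versions.

```python
import re


def parse_tcp_counters(netstat_output):
    """Extract the three TCP segment counters from `netstat -s` text."""
    patterns = {
        "received": r"(\d+) segments received",
        # Some net-tools versions print "send out" instead of "sent out".
        "sent": r"(\d+) segments sen[dt] out",
        "retransmitted": r"(\d+) segments retransmitted",
    }
    counters = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, netstat_output)
        if match is None:
            raise ValueError(f"counter not found: {name}")
        counters[name] = int(match.group(1))
    return counters


def retransmission_rate(received, sent, retransmitted):
    """Retransmission rate in percent, using the formula from this article."""
    return retransmitted * 100 / (received + sent)


# The sample output shown above.
SAMPLE = """\
158476396 failed connection attempts
4955724369157 segments received
20667106951230 segments sent out
352486465012 segments retransmitted
39007293 bad segments received
"""

rate = retransmission_rate(**parse_tcp_counters(SAMPLE))
print(f"retransmission rate: {rate:.2f}%")
```

For the sample counters, the result is roughly 1.4 percent, which is below the 5 percent threshold. Keep in mind that these counters are cumulative since boot, so comparing two snapshots taken a few minutes apart gives a better picture of the current state.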

2. Check Server Performance

Make sure your server responds quickly. A slow server makes clients time out and close the connection.

Check the load average on the server:

uptime

21:11:01 up 106 days, 10:23, 1 user, load average: 16.79, 24.84, 23.42

If any of the three load average values (for the last 1, 5, and 15 minutes) is higher than the number of CPU cores on the server, the server is overloaded. Consider adding more CPUs or upgrading the disk subsystem, depending on the exact bottleneck.
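The comparison can be automated with a few lines of Python. This is a minimal sketch: the function names are hypothetical, and the core count of 16 in the demo is an assumption, since the article does not say how many cores the sample server has.

```python
import os


def overloaded_windows(load_averages, cpu_cores):
    """Return the load-average windows (1, 5, 15 min) that exceed the core count."""
    labels = ("1 min", "5 min", "15 min")
    return [label for label, load in zip(labels, load_averages) if load > cpu_cores]


def check_local_host():
    """Apply the same check to the machine this script runs on (Unix only)."""
    cores = os.cpu_count() or 1  # cpu_count() can return None
    return overloaded_windows(os.getloadavg(), cores)


# Values from the uptime output above; 16 cores is a hypothetical core count.
print(overloaded_windows((16.79, 24.84, 23.42), cpu_cores=16))
```

With 16 cores, all three windows exceed the core count, which suggests sustained overload and not a short spike.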

3. Handle Long Processes

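Some requests trigger work that takes longer than clients are willing to wait. Move that work out of the request path so the server can respond quickly.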

- Asynchronous Processing: Use async methods for long tasks.

- Background Jobs: Offload heavy tasks to background jobs.
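The background job idea can be sketched with the Python standard library: the request handler queues the slow task and returns a job id at once, while a worker thread does the work. All names here (`submit`, `get_result`, `build_report`) are hypothetical, and a production setup would more likely use a dedicated job queue than an in-process thread.

```python
import queue
import threading
import uuid

jobs = queue.Queue()
results = {}  # job id -> result, filled in by the worker
results_lock = threading.Lock()


def worker():
    """Process queued jobs one at a time, outside the request path."""
    while True:
        job_id, func, args = jobs.get()
        try:
            outcome = func(*args)
        except Exception as exc:  # keep the worker alive if one job fails
            outcome = exc
        with results_lock:
            results[job_id] = outcome
        jobs.task_done()


def submit(func, *args):
    """Queue a slow task and return a job id immediately."""
    job_id = str(uuid.uuid4())
    jobs.put((job_id, func, args))
    return job_id


def get_result(job_id):
    """Return the result if the job has finished, else None."""
    with results_lock:
        return results.get(job_id)


threading.Thread(target=worker, daemon=True).start()


def build_report(n):  # hypothetical slow task
    return sum(i * i for i in range(n))


job_id = submit(build_report, 1000)
jobs.join()  # only for this demo: wait until the worker is done
print(get_result(job_id))
```

In a real handler you would return the job id to the client right after `submit` and let the client poll for the result, so no request stays open long enough to be abandoned.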

4. Client-Side Optimization

Optimize the client side to reduce dropped requests.

- Efficient Code: Ensure client code is efficient.

- Increase Timeouts: Increase connect and read timeouts on the client side.

- Error Handling: Implement robust error handling and retries.
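The timeout and retry advice might look like this in a Python client. It is a sketch under stated assumptions: `fetch` and `with_retries` are hypothetical helpers, the 10-second timeout and the backoff values are arbitrary, and `urllib` applies a single timeout to both connecting and reading.

```python
import socket
import time
import urllib.error
import urllib.request


def fetch(url, timeout=10.0):
    """GET a URL with an explicit timeout in seconds."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def with_retries(call, attempts=3, base_delay=0.5, sleep=time.sleep,
                 retry_on=(urllib.error.URLError, socket.timeout)):
    """Run call(); on a retryable error wait base_delay * 2**n and try again."""
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)


# Demo with a hypothetical flaky call that fails twice, then succeeds.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise urllib.error.URLError("simulated timeout")
    return "ok"


delays = []  # record the backoff delays instead of actually sleeping
print(with_retries(flaky, sleep=delays.append), delays)
```

A real call would look like `with_retries(lambda: fetch(url))`. Retry only requests that are safe to repeat, such as idempotent GETs, because a request that ended in a 499 may still have been processed by the backend.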

Try Akmatori - A Globally Distributed TCP/UDP Load Balancer

Dealing with 499 errors can be challenging. But there’s a solution to enhance your site's performance and reliability. Try Akmatori, a globally distributed TCP/UDP load balancer. Akmatori ensures your requests are handled efficiently across the globe. It reduces latency, prevents timeouts, and improves user experience.

Benefits of Akmatori:

- Global Distribution: Your traffic is balanced across multiple regions.

- High Availability: Ensures your site is always up.

- Improved Performance: Reduces client timeouts and errors.

Conclusion

The 499 error in Nginx is client-driven. It happens when the client closes the connection before the server responds. By understanding its causes and impacts, you can take steps to reduce its occurrence. Regular monitoring and optimization are key. Keep your server, network, and client side efficient. This will lead to a better user experience.