Integrating Autogen with Ollama to Build AI Agents

In this tutorial, you'll learn how to combine Autogen with models served locally by Ollama to create interactive AI agents. The example builds a weather chatbot: an Autogen agent that answers questions by calling a simple Python function as a tool.

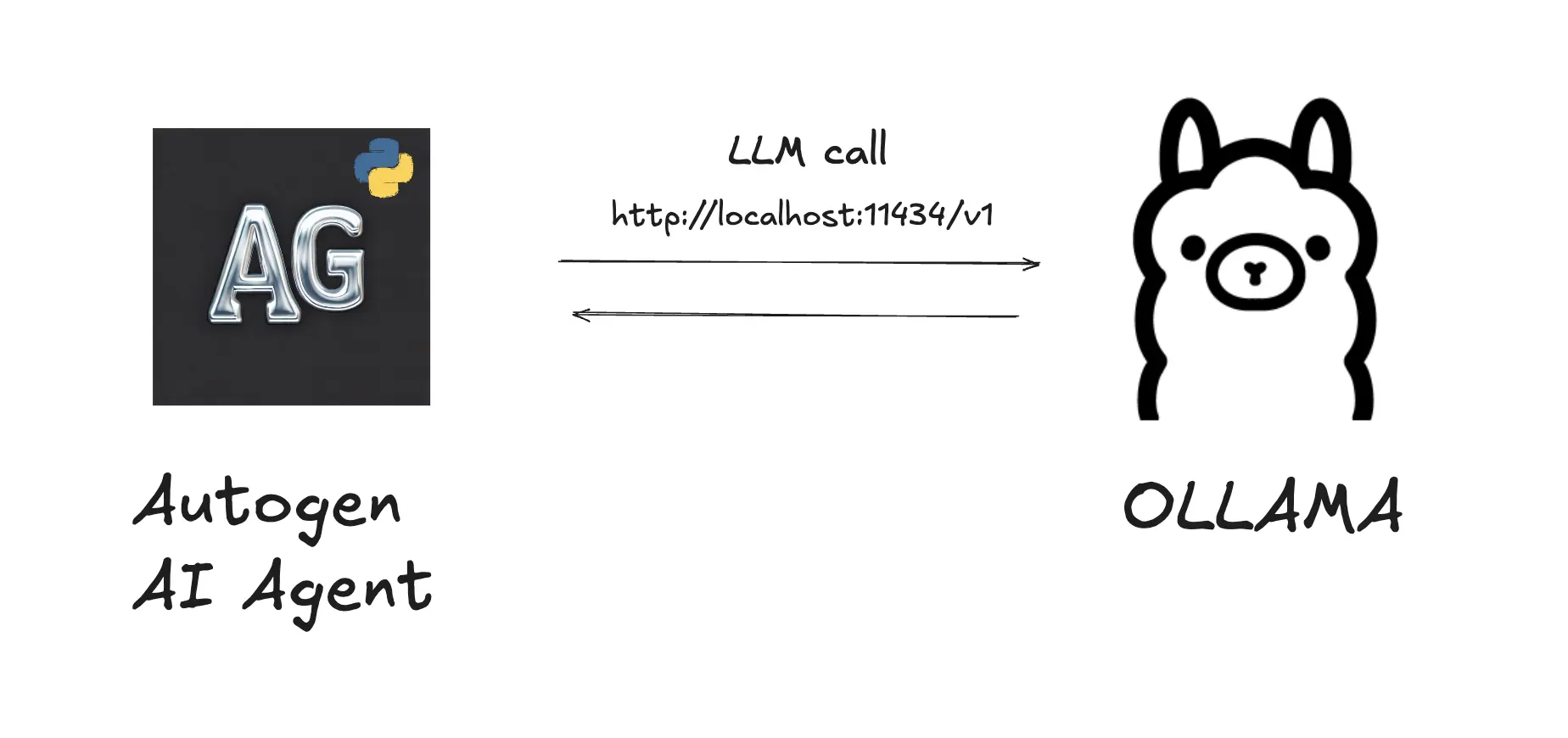

Why Combine Autogen with Ollama?

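Autogen is a framework for building agents that can call tools, keep conversation memory, and work together in multi-agent teams. Ollama runs open models on your own machine, which keeps prompts and data local and lets you choose and customize the model. Together, they let you build tool-using agents without sending data to a hosted LLM API.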

Requirements

Ensure you have these prerequisites:

- Python 3.10+ (required by current autogen-agentchat releases)

- Ollama installed and running locally

- An LLM that supports tool calling. This tutorial uses qwen2.5-coder:0.5b, which you can download with:

    ollama pull qwen2.5-coder:0.5b

- Required Python packages (the quotes stop shells such as zsh from interpreting the brackets):

    pip install autogen-agentchat "autogen-ext[openai]"
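Before wiring up the agent, it can help to confirm that Ollama is serving its OpenAI-compatible API and that the model has been pulled. The script below is a minimal standard-library sketch; it assumes Ollama's default port 11434 and that the OpenAI-compatible /v1/models endpoint lists pulled models under data[].id.

```python
import json
import urllib.request

# Assumed values: the same endpoint and model name used later in this tutorial.
OLLAMA_BASE_URL = "http://localhost:11434/v1"
MODEL = "qwen2.5-coder:0.5b"


def list_model_ids(payload: dict) -> list[str]:
    """Extract model IDs from an OpenAI-style /models response body."""
    return [entry["id"] for entry in payload.get("data", [])]


def fetch_models(base_url: str = OLLAMA_BASE_URL, timeout: float = 5.0) -> dict:
    """GET {base_url}/models and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        ids = list_model_ids(fetch_models())
    except (OSError, ValueError) as exc:  # connection refused, timeout, or non-JSON reply
        print(f"Could not query {OLLAMA_BASE_URL}/models: {exc}")
    else:
        if MODEL in ids:
            print(f"OK: {MODEL} is available")
        else:
            print(f"{MODEL} not found; run: ollama pull {MODEL}")
```

If the script cannot connect, start Ollama first; if the model is missing, run the pull command above.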

Example Code

Here’s how to build a weather chatbot using Autogen and Ollama.

Step 1: Define a Tool

A tool is a plain Python function that gives the agent a specific capability. Here, the function returns a hard-coded weather report for a given city; a real implementation would query a weather service:

    async def get_weather(city: str) -> str:
        """Get the current weather for a city."""
        return f"The weather in {city} is 73 degrees and Sunny."
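The model never runs this function itself; it only sees a tool definition made of a name, a description, and a JSON Schema for the parameters, which Autogen derives from the function's signature (and typically its docstring). The sketch below is a simplified, hand-written illustration of that idea, not Autogen's implementation: the helper sketch_tool_schema is hypothetical and maps only a few basic Python types. It also calls the tool directly, without a model, which is a quick way to test it.

```python
import asyncio
import inspect
from typing import Any


async def get_weather(city: str) -> str:
    """Get the current weather for a city."""
    return f"The weather in {city} is 73 degrees and Sunny."


# Hypothetical mapping from Python annotations to JSON Schema types.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def sketch_tool_schema(func: Any) -> dict:
    """Build a simplified OpenAI-style tool schema from a function signature."""
    params = inspect.signature(func).parameters
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {
            "type": "object",
            "properties": {
                name: {"type": _JSON_TYPES.get(p.annotation, "string")}
                for name, p in params.items()
            },
            # Parameters without defaults are required.
            "required": [
                name for name, p in params.items() if p.default is inspect.Parameter.empty
            ],
        },
    }


if __name__ == "__main__":
    # Call the tool directly to check it works before giving it to an agent.
    print(asyncio.run(get_weather("Paris")))
    print(sketch_tool_schema(get_weather))
```

Clear parameter names and a short docstring matter because they are most of what the model has to go on when deciding whether and how to call the tool.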

Step 2: Create a Weather Agent

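Define an agent using AssistantAgent. Because Ollama exposes an OpenAI-compatible API at http://localhost:11434/v1, the agent can use the local model through Autogen's OpenAIChatCompletionClient. The model_info dictionary declares the model's capabilities, which Autogen cannot look up for a non-OpenAI model name: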

    from autogen_agentchat.agents import AssistantAgent
    from autogen_ext.models.openai import OpenAIChatCompletionClient

    weather_agent = AssistantAgent(
        name="weather_agent",
        model_client=OpenAIChatCompletionClient(
            model="qwen2.5-coder:0.5b",
            base_url="http://localhost:11434/v1",  # Ollama's OpenAI-compatible API endpoint
            api_key="ollama",  # placeholder: the OpenAI client requires a key, Ollama ignores it
            model_info={
                # Capabilities Autogen cannot infer for a non-OpenAI model.
                "vision": False,
                "function_calling": True,
                "json_output": True,
                "family": "unknown",
            },
        ),
        tools=[get_weather],
    )
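To see what travels over that endpoint, the sketch below sends roughly the kind of Chat Completions request an OpenAI-style client makes when a tool is registered, using only the standard library. It is a simplified, hand-built request, not what Autogen sends byte for byte; the tool spec is written out by hand, and the script assumes the model and endpoint from the snippet above.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/v1"  # assumed: same endpoint as base_url above

# Hand-written tool spec for illustration (Autogen generates this for you).
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def build_chat_request(model: str, user_message: str, tools: list[dict]) -> dict:
    """Build an OpenAI-style Chat Completions body that advertises tools."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [{"type": "function", "function": spec} for spec in tools],
    }


def post_json(url: str, body: dict, timeout: float = 60.0) -> dict:
    """POST a JSON body and decode the JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    body = build_chat_request("qwen2.5-coder:0.5b", "What's the weather in Paris?", [WEATHER_TOOL])
    try:
        reply = post_json(f"{BASE_URL}/chat/completions", body)
    except (OSError, ValueError) as exc:  # Ollama not running, timeout, or bad JSON
        print(f"Request failed: {exc}")
    else:
        # A tool-capable model may answer with tool_calls instead of plain text.
        message = reply["choices"][0]["message"]
        print(message.get("tool_calls") or message.get("content"))
```

Small models do not always choose to call the tool, so the printed result can vary between runs.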

Step 3: Orchestrate the Agent with a Team

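To run the agent, wrap it in a RoundRobinGroupChat team. In this team preset, agents take turns responding to a task in a fixed order. With a single agent and max_turns=1, each run stops after the agent's first response, which hands control back to the user: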

    from autogen_agentchat.teams import RoundRobinGroupChat

    agent_team = RoundRobinGroupChat([weather_agent], max_turns=1)
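To make the turn-taking concrete, here is a toy model in plain Python. It is not Autogen's implementation: the agents are hypothetical functions that take the conversation so far and return a reply, and only the max_turns stopping rule is modeled (Autogen's teams also accept termination conditions, which this ignores).

```python
from typing import Callable

# A toy "agent": takes the conversation so far, returns its reply.
Agent = Callable[[list[str]], str]


def round_robin(
    agents: list[tuple[str, Agent]], task: str, max_turns: int
) -> list[tuple[str, str]]:
    """Let agents reply in a fixed rotation, stopping after max_turns replies."""
    history = [task]
    transcript: list[tuple[str, str]] = []
    for turn in range(max_turns):
        name, agent = agents[turn % len(agents)]  # cycle through agents in order
        reply = agent(history)
        history.append(reply)  # later agents see all earlier messages
        transcript.append((name, reply))
    return transcript


if __name__ == "__main__":
    weather = ("weather_agent", lambda history: f"Answering: {history[-1]}")
    # One agent with max_turns=1: a single reply per run, as in the tutorial's team.
    print(round_robin([weather], "What's the weather in Paris?", max_turns=1))
```

With two agents a and b and max_turns=3, the rotation is a, b, a. With the tutorial's single agent, the rotation has nothing to alternate with, so max_turns=1 simply caps each run at one reply.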

Step 4: Build a Console Interface

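Next, add a console loop. It reads a message, runs the team with run_stream(), and passes the resulting message stream to Console, which prints each message as it arrives. Typing exit ends the session: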

    import asyncio

    from autogen_agentchat.ui import Console

    async def main() -> None:
        while True:
            # Read the next message; typing "exit" ends the session.
            user_input = input("Enter a message (type 'exit' to leave): ")
            if user_input.strip().lower() == "exit":
                break
            # Run the team and stream its messages to the console.
            stream = agent_team.run_stream(task=user_input)
            await Console(stream)

    if __name__ == "__main__":
        asyncio.run(main())

Step 5: Full Code Example

Here’s the complete Python script that integrates Autogen with Ollama to create an AI weather agent:

    import asyncio

    from autogen_agentchat.agents import AssistantAgent
    from autogen_agentchat.teams import RoundRobinGroupChat
    from autogen_agentchat.ui import Console
    from autogen_ext.models.openai import OpenAIChatCompletionClient


    # Define a tool: a plain async Python function the agent can call.
    async def get_weather(city: str) -> str:
        """Get the current weather for a city."""
        return f"The weather in {city} is 73 degrees and Sunny."


    async def main() -> None:
        # Define an agent backed by the local Ollama model.
        weather_agent = AssistantAgent(
            name="weather_agent",
            model_client=OpenAIChatCompletionClient(
                model="qwen2.5-coder:0.5b",
                base_url="http://localhost:11434/v1",  # Ollama's OpenAI-compatible API endpoint
                api_key="ollama",  # placeholder: the OpenAI client requires a key, Ollama ignores it
                model_info={
                    # Capabilities Autogen cannot infer for a non-OpenAI model.
                    "vision": False,
                    "function_calling": True,
                    "json_output": True,
                    "family": "unknown",
                },
            ),
            tools=[get_weather],
        )

        # Define a team with a single agent that stops after one response per run.
        agent_team = RoundRobinGroupChat([weather_agent], max_turns=1)

        while True:
            # Get user input from the console.
            user_input = input("Enter a message (type 'exit' to leave): ")
            if user_input.strip().lower() == "exit":
                break
            # Run the team and stream messages to the console.
            stream = agent_team.run_stream(task=user_input)
            await Console(stream)


    # Run the script
    if __name__ == "__main__":
        asyncio.run(main())
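To try another tool-capable model or a remote Ollama host without editing the script, you could read the model name and endpoint from the environment. This is a small sketch; the variable names WEATHER_AGENT_MODEL and WEATHER_AGENT_BASE_URL are hypothetical, chosen for this example, and the defaults mirror the script above.

```python
import os
from typing import Mapping

# Defaults match the tutorial's script.
DEFAULT_MODEL = "qwen2.5-coder:0.5b"
DEFAULT_BASE_URL = "http://localhost:11434/v1"


def load_settings(env: Mapping[str, str] = os.environ) -> tuple[str, str]:
    """Return (model, base_url) from hypothetical env vars, falling back to defaults."""
    model = env.get("WEATHER_AGENT_MODEL", DEFAULT_MODEL)
    # Normalize the endpoint by dropping any trailing slash.
    base_url = env.get("WEATHER_AGENT_BASE_URL", DEFAULT_BASE_URL).rstrip("/")
    return model, base_url


if __name__ == "__main__":
    model, base_url = load_settings()
    print(f"model={model} base_url={base_url}")
```

Inside main(), you would pass model=model and base_url=base_url to OpenAIChatCompletionClient. The model_info flags still have to describe whichever model you pick; in particular, function_calling should only be True for a model that supports tools.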

Step 6: Run the Script

To test your chatbot:

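- Start Ollama (skip this if it already runs as a background service):

    ollama serve

- In a second terminal, run the Python script, saved here as weather_chatbot.py:

    python weather_chatbot.py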

Enter a query like "What's the weather in Paris?" and watch your AI agent respond!

Key Features of This Integration

- Local AI Models: Ollama runs models locally, ensuring privacy.

- Easy Agent Customization: Autogen supports custom tools and workflows.

- Function Calling: The model can call ordinary Python functions, such as get_weather, to work with real data and services.
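Under the hood, function calling follows the OpenAI tool-calling format: the model replies with tool_calls whose arguments arrive as a JSON string, the caller runs the matching function, and the result goes back to the model as a tool message. Autogen's AssistantAgent handles this step for you; the sketch below is a simplified, hand-written illustration of it (not Autogen's code), and the call ID in the demo is made up.

```python
import asyncio
import json
from typing import Any, Awaitable, Callable

ToolFn = Callable[..., Awaitable[str]]


async def get_weather(city: str) -> str:
    """Get the current weather for a city."""
    return f"The weather in {city} is 73 degrees and Sunny."


async def run_tool_calls(
    tool_calls: list[dict[str, Any]], registry: dict[str, ToolFn]
) -> list[dict[str, str]]:
    """Run OpenAI-style tool calls and build the matching 'tool' result messages."""
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        fn = registry.get(name)
        if fn is None:
            content = f"Error: unknown tool {name!r}"
        else:
            # The model sends arguments as a JSON-encoded string.
            args = json.loads(call["function"]["arguments"])
            content = await fn(**args)
        results.append({"role": "tool", "tool_call_id": call["id"], "content": content})
    return results


if __name__ == "__main__":
    # A made-up tool call, shaped like what a model returns in message.tool_calls.
    calls = [
        {
            "id": "call_1",
            "type": "function",
            "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
        }
    ]
    print(asyncio.run(run_tool_calls(calls, {"get_weather": get_weather})))
```

Returning an error message for an unknown tool, rather than raising, lets the model see what went wrong and try again.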

Scale Your AI Efforts

Looking for a robust platform to enhance your AI infrastructure? Try Akmatori. Akmatori automates incident response, reduces downtime, and simplifies troubleshooting, ensuring uninterrupted AI workflows.

Additionally, deploy AI systems with cost-efficient servers from Gcore. Get high-performance virtual machines and bare-metal servers worldwide.

Conclusion

This guide showcased how to integrate Autogen with Ollama to create an AI agent. With this framework, you can build private, customizable, and efficient AI solutions tailored to your needs.

For more insights into AIOps and DevOps tools, explore our Akmatori blog.